Teleoperation, or remote operation, allows a human operator to control a robot or machine using tools such as a remote control, joystick, or virtual controller. It is a foundational technology in modern robotics, enabling robots to be deployed immediately in complex environments and laying the groundwork for future autonomous systems. While the prefix "tele" traditionally implies control over long distances, teleoperation can occur over both short and long ranges, depending on the application.

This technology is particularly vital in situations where autonomous robots are not yet feasible or where human precision and adaptability are essential. For example, teleoperated robots are extensively used in scenarios too dangerous, inaccessible, or remote for humans, such as disaster zones or deep-sea exploration. In robotic surgery, teleoperation ensures precision and real-time responsiveness, while teleoperated caregiver robots can replicate nuanced human interactions to provide empathetic care.

Building Blocks of a Teleoperation System

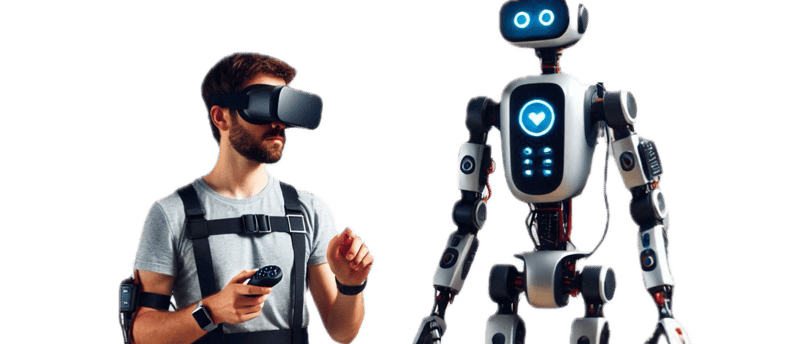

A teleoperation system comprises several components. Typically, a human operator uses a control interface to send commands that the robot executes, while receiving data from the robot's sensors (such as cameras) in real time. First, the system requires a device that the operator can use to interact with the robot [1]. This device must let the user control the robot's movements easily and effectively. If the use case involves dexterity, the control mechanism must support precise movements. Joysticks, gamepads, and haptic gloves are typical choices. Emerging technologies, such as augmented reality (AR) systems paired with advanced feedback mechanisms, are also enabling immersive teleoperation experiences. These innovations provide not only intuitive controls but also real-time sensory information. Intuitive interfaces are especially important in high-risk environments, where the operator might not be an experienced teleoperator but rather an expert in hazardous tasks such as handling explosives or radioactive materials.

Teleoperation systems also rely on low-latency, high-bandwidth communication channels to ensure real-time command execution and sensory feedback, and to minimize operational disruption. Ideally, latency should stay at or below 200 ms for optimal performance, while a significant reduction in throughput is observed for values higher than 500 ms [2].
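To make these thresholds concrete, here is a minimal Python sketch of how a teleoperation client might label the quality of its link. The 200 ms and 500 ms values come from the text above; the function names, the three labels, and the choice to summarize recent samples by their median are illustrative assumptions, and the source does not say whether the figures refer to one-way or round-trip latency.

```python
from statistics import median

# Thresholds quoted in the text above, in milliseconds.
GOOD_LATENCY_MS = 200.0
POOR_LATENCY_MS = 500.0


def classify_latency(latency_ms: float) -> str:
    """Map one latency sample to a coarse label (labels are hypothetical)."""
    if latency_ms < 0:
        raise ValueError("latency cannot be negative")
    if latency_ms <= GOOD_LATENCY_MS:
        return "good"
    if latency_ms <= POOR_LATENCY_MS:
        return "marginal"  # assumed middle band between the two thresholds
    return "poor"


def summarize_link(samples_ms: list[float]) -> str:
    """Label a link by the median of recent samples, so one spike is ignored."""
    if not samples_ms:
        raise ValueError("need at least one latency sample")
    return classify_latency(median(samples_ms))
```

A client could call `summarize_link` on a sliding window of recent measurements and warn the operator, or reduce the robot's speed, when the label drops below "good".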

Finally, tactile sensors can be added to teleoperation systems to provide haptic feedback to the operator, which enhances the user experience and helps with dexterity and manipulation tasks.

In the video below, we can see an example of high-quality teleoperation technology (XRGO) that uses a VR headset to control any robotic embodiment, developed by SRI International.

Recently, open-source solutions for teleoperation have also been proposed, as shown in the video below.

Data collection

Teleoperation is also a valuable means of collecting useful data. Current machine learning models need a considerable number of training samples. Generating data for robotics is a big challenge compared to areas like computer vision or natural language processing, where a collection of images or texts can suffice. In robotics, we need data that precisely captures the steps the robot needs to follow to accomplish a specific task. Although recent advances in Artificial Intelligence have motivated researchers to train robots using examples extracted from videos [3], it is still unclear whether this approach can be truly successful.

As a result, teleoperation is still the best means of collecting robotic data, for multiple reasons. First, when teleoperating a robot, we explicitly demonstrate the set of movements that has to be carried out to accomplish a task. Moreover, when the same task is performed by different operators, the data captures different, valid approaches to solving the task, which makes it less tied to any single strategy. We can also instruct operators to follow additional restrictions that make the data more robust. For instance, in the case of a robotic arm picking up an object, we can ask them not to always follow the shortest, most direct trajectory but instead to use other trajectories that would be useful in a real scenario where obstacles can impede the movements of the arm.
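As a rough illustration of what such demonstrations can look like once recorded, the sketch below stores each teleoperated run as a sequence of observation and action pairs tagged with the task and the operator. The class names, field names, and the `operators_per_task` helper are hypothetical and chosen only for this example; real datasets usually also carry camera frames and richer metadata.

```python
from dataclasses import dataclass, field


@dataclass
class Step:
    timestamp_s: float
    observation: list[float]  # e.g. joint positions read from the robot
    action: list[float]       # command sent by the operator at this instant


@dataclass
class Episode:
    task: str
    operator_id: str
    steps: list[Step] = field(default_factory=list)

    def record(self, timestamp_s, observation, action):
        """Append one time step, copying the inputs to avoid aliasing."""
        self.steps.append(Step(timestamp_s, list(observation), list(action)))

    def as_pairs(self):
        """Return (observation, action) pairs, the usual supervised format."""
        return [(s.observation, s.action) for s in self.steps]


def operators_per_task(episodes):
    """Group operator ids by task to check how varied the demonstrations are."""
    result = {}
    for ep in episodes:
        result.setdefault(ep.task, set()).add(ep.operator_id)
    return result
```

Tracking the operator alongside each episode makes it easy to verify that a task is covered by several people, and therefore by several valid strategies, before training on it.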

Another benefit of using teleoperation for robotic data collection is that it removes the need to transform data from the joint arrangement of the human arm into the joint arrangement of the robot. As a result, the extended range of motion of the robotic arm becomes available.

Finally, several other aspects have to be considered when collecting data through teleoperation, such as data augmentation, and they heavily influence the performance of the models [4].

There have been several innovative projects recently exploiting teleoperation for robotic data collection. For example, we can see how data collection through teleoperation is performed in the Dexterity hub project. Several humanoid robotic companies are also relying on teleoperation to improve their workflows and evaluate robot performance. Below is an example video:

Training Robotic AI Through Learning from Human Demonstrations

One of the most straightforward approaches that rely on teleoperation data is to build an AI model inspired by how a human expert solves a specific task. This approach is called learning from human demonstrations, or imitation learning, and in some cases it is related to reinforcement learning methods. The main difference is that it does not require dealing with complex reward functions, as is the case for most reinforcement learning techniques, since the rewards can, to some extent, be extracted from the expert demonstrations.

As an example of these techniques, we can cite behavioral cloning, an approach based on imitating (cloning) the actions present in the data generated by the human operator. Behavioral cloning models are easy to train and provide high accuracy, since the model is essentially trained to mimic the behavior of a human expert performing the task. However, one of their main drawbacks is that they cannot adequately generalize to new tasks [5]. The model can struggle even with tasks that are only slightly different from those captured in the training data.
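At its core, behavioral cloning is supervised learning on (state, action) pairs. The toy sketch below illustrates this with a one-dimensional state and a linear policy fitted by least squares. The expert rule (`action = -0.5 * state`), the function names, and the linear model are all assumptions made for illustration; practical systems use neural networks over images and joint states.

```python
def fit_linear_policy(demos):
    """Least-squares fit of action = w * state + b to (state, action) pairs."""
    n = len(demos)
    if n < 2:
        raise ValueError("need at least two demonstration pairs")
    mean_s = sum(s for s, _ in demos) / n
    mean_a = sum(a for _, a in demos) / n
    var_s = sum((s - mean_s) ** 2 for s, _ in demos)
    if var_s == 0:
        raise ValueError("states must not all be identical")
    cov_sa = sum((s - mean_s) * (a - mean_a) for s, a in demos)
    w = cov_sa / var_s
    b = mean_a - w * mean_s
    return w, b


# Hypothetical expert: the operator commands a velocity proportional to the
# signed distance from the target, i.e. action = -0.5 * state.
demos = [(s / 10.0, -0.5 * (s / 10.0)) for s in range(-10, 11)]
w, b = fit_linear_policy(demos)


def policy(state):
    """Cloned policy: predicts the action the expert would likely take."""
    return w * state + b
```

The model never sees a reward: it only learns to reproduce what the operator did in the states covered by the demonstrations.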

This lack of generalization is not exclusive to behavioral cloning; it is one of the main drawbacks of other models for dexterous manipulation as well. In recent years, new approaches have been proposed to enhance the generalization capabilities of these models. Techniques like diffusion policy [6] or variational autoencoders [7] encourage diverse action generation, thereby reducing overfitting. Furthermore, a new family of models, Vision Language Action (VLA) models, has recently attracted the attention of the robotics research community. These models are trained on massive datasets of images, text, and robotic actions, which enables them to learn complex relationships between visual scenes, natural language instructions, and the appropriate robotic responses. This results in more versatile models. Teleoperation plays an important role in VLA model training, since the action data is typically acquired this way [8]. In addition, one of the advantages of the aforementioned models is that they only require a small number of expert demonstrations as training data, which reduces the cost of data collection through teleoperation.

Another school of thought holds that haptic feedback is crucial for robots to learn to manipulate real-world objects effectively and with high dexterity. A recent study highlights how tactile feedback significantly improves task performance, allowing robots to mimic nuanced, human-like manipulation [9].

In future posts we will describe some of these models and how they can be used to teach dexterity to a robot.